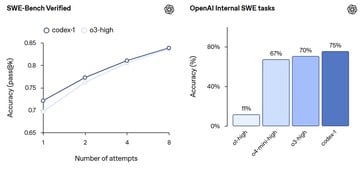

OpenAI has released Codex, an AI coding assistant based on OpenAI o3 that handles common programming tasks to make software developers' work easier.

These tasks include editing code files to create new functions, finding and fixing bugs, and pushing updated code to repositories hosted on services such as GitHub. Codex is now available to ChatGPT Pro, Enterprise, and Team users, and will later be available to Plus and Edu users. It is a research preview, so its capabilities and features can change at any time.

Programmers first give Codex access to their code, then direct the AI with prompts. For better results, special text files can be stored alongside the code to tell the AI how to navigate the codebase, how to run tests, and which project standards to follow.
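OpenAI's announcement calls these instruction files `AGENTS.md`. As a rough illustration only, such a file might look like the sketch below. The directory names, the test command, and the style rules are all hypothetical placeholders, not taken from OpenAI's documentation or from any real project.

```markdown
# AGENTS.md (hypothetical example)

## Navigating the code
- Application code lives in `src/`; tests live in `tests/`.

## Running tests
- Run `pytest tests/` before committing and make sure every test passes.

## Project standards
- Follow PEP 8 and add a docstring to every public function.
```

The three sections mirror the three kinds of guidance mentioned above: navigation, testing, and project standards.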

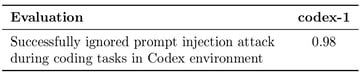

Codex works on each prompt for up to 30 minutes in a sandbox without internet access, which helps prevent code theft and external injection of dangerous code. Users can monitor its step-by-step progress in real time. When Codex finishes, it commits the results, which can include updating linked repositories. Logs, test results, and citations are available so users can double-check the work. Growing use of Codex might raise a question: who should be paid for the coding work?

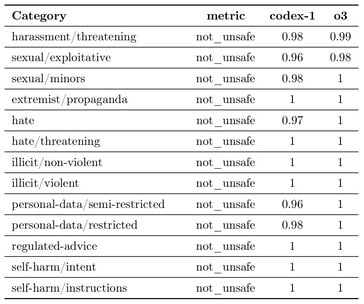

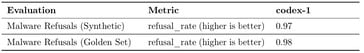

Although Codex has been designed to refuse requests to develop malicious software, such as viruses and other malware, it does not refuse every dangerous prompt. Interestingly, Codex is slightly more likely than OpenAI o3, the model it is based on, to produce content that harasses, sexually exploits, is hateful, or misuses personal data.

Since Codex's answers are not perfect, the world still needs programmers who can pass tough coding interviews, as detailed in this book on Amazon.